Human-Centered AI Starts with Where Your Data Lives

Most startups fix infrastructure later. We chose European data sovereignty from day one.

Margot Lor-Lhommet

Chief Technical Officer, PhD

Human-centered AI is often reduced to conversational quality. But the most consequential decisions happen before anyone logs in: data sovereignty, infrastructure choices, jurisdictional commitments. These invisible decisions are not details. They are design.

A user opens an AI coaching app. The interface is warm, the responses thoughtful.

But where is their data stored? Under which jurisdiction? Who can access it? These questions are rarely visible. And yet, they determine what kind of trust is actually on offer.

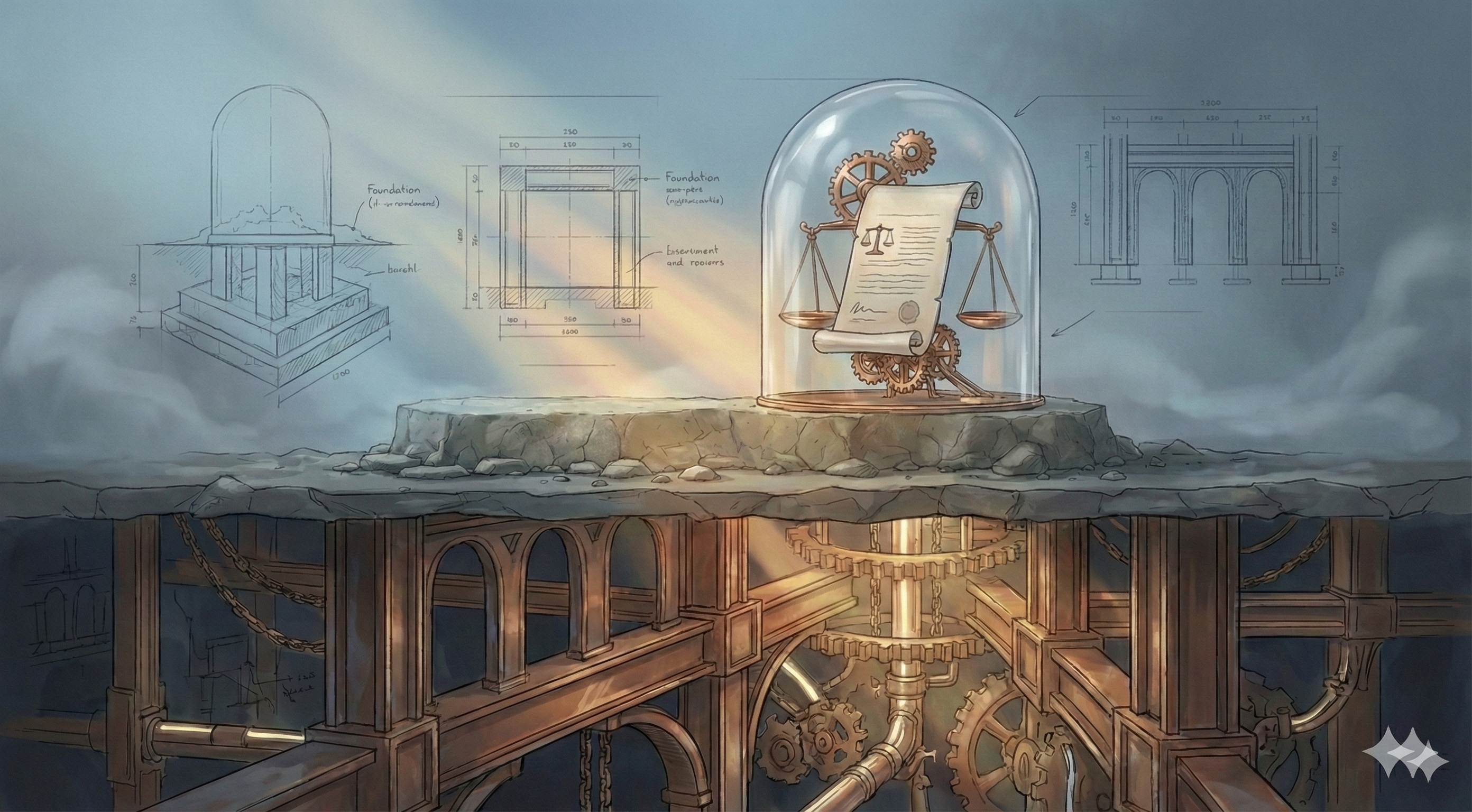

The visible and the invisible

When we talk about human-centered AI, the conversation focuses on what users see: natural language, empathetic tone, conversational flow.

But beneath the surface lies an invisible architecture. Where servers are located. Which laws apply. What happens when a company is acquired, subpoenaed, or changes its terms of service.

When AI systems handle sensitive domains — careers, doubts, inner worlds — these invisible choices matter as much as the interface.

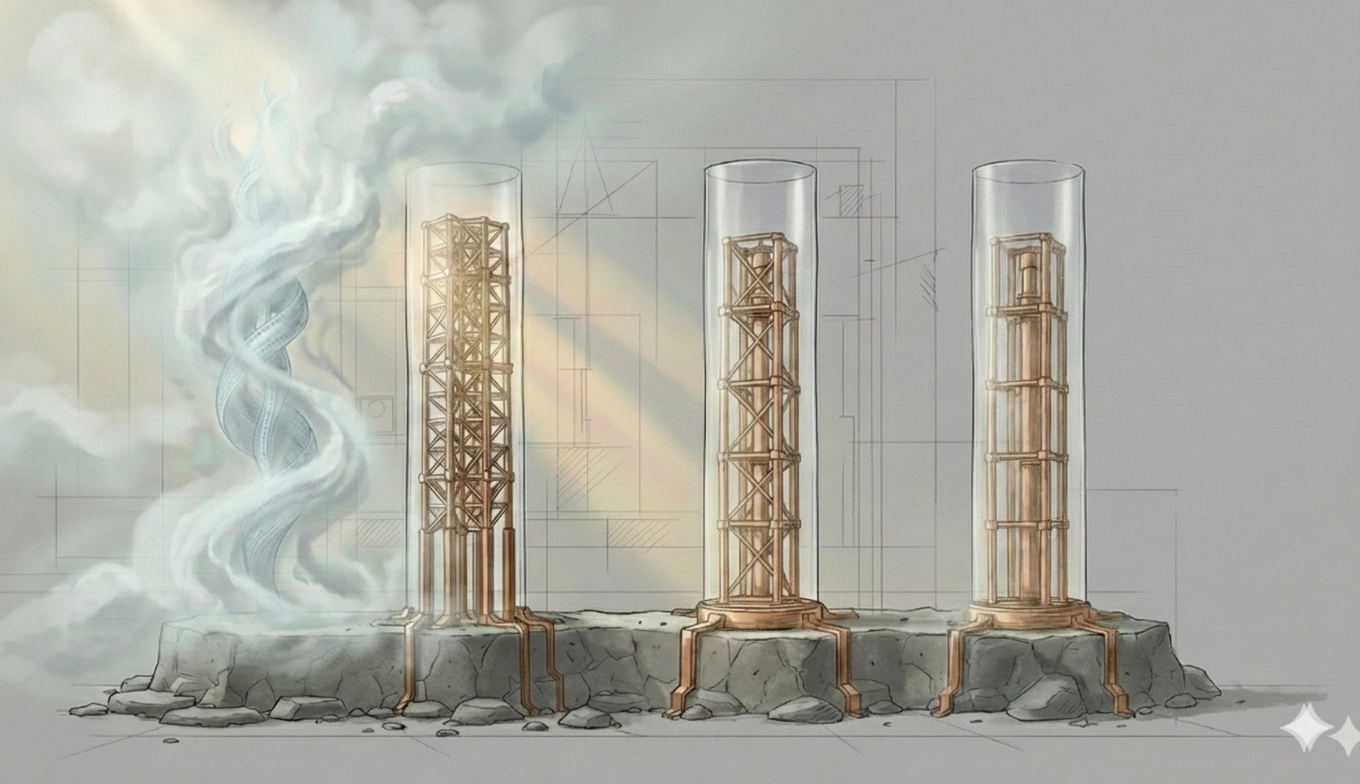

Data location is a jurisdictional choice

Where your data lives determines which legal frameworks protect it. The question is not whether it’s encrypted. The question is: what legal and institutional structures govern its existence?

The EU’s GDPR offers some of the strongest data protection rights in the world. Data stored elsewhere may be subject to different rules — or to laws allowing government access without user notification.

In today’s geopolitical climate, this is not abstract. The need for sovereign AI infrastructure — European data under European law — is no longer a niche concern. It is a strategic necessity.

The startup shortcut trap

Here’s what usually happens in early-stage startups: you move fast. You pick the easiest tools, the cheapest hosting, the quickest path to a working prototype.

Infrastructure decisions get deferred.

“We’ll fix it later.”

This is how technical debt accumulates. And data infrastructure debt is particularly insidious — it touches everything, and migrating is painful.

We made a different choice.

Before launching our MVP, we migrated our entire data infrastructure to Europe.

Not because it was easy — it wasn’t. But because we wanted to be clean from day one. No legacy shortcuts to unwind. No retroactive privacy patches. No “we’ll deal with sovereignty later.”

When you build AI that works with people’s inner worlds, you don’t get to defer these decisions.

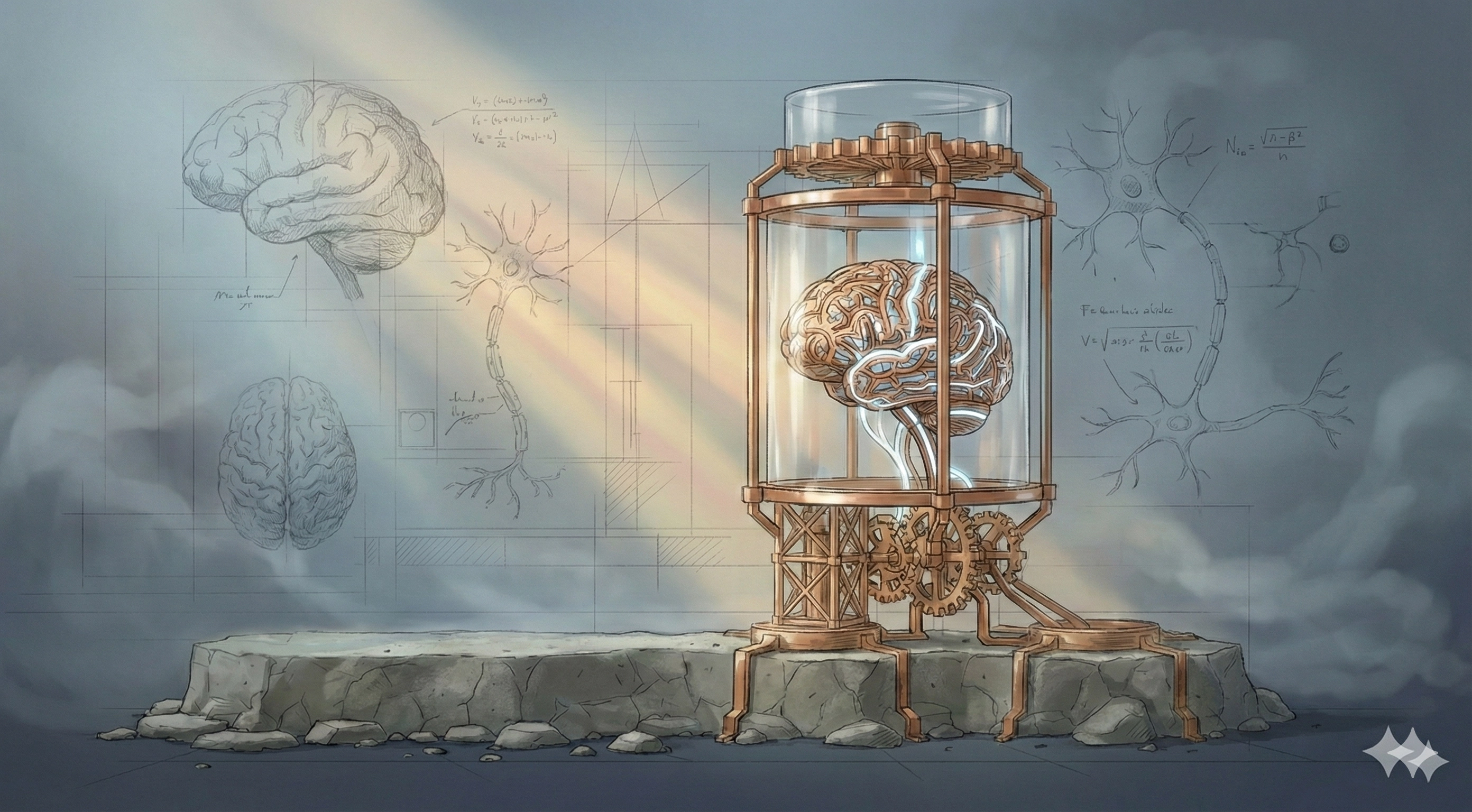

What “human-centered” actually requires

The phrase “human-centered AI” has become a marketing term. Everyone claims it.

Here is our definition: human-centered AI means making decisions that prioritize user wellbeing even when those decisions are invisible and costly.

European data residency is one such decision. So is building explainable architectures instead of black-box models. So is letting users see — and correct — what the AI believes about them.

The decisions no one sees

AI is increasingly present in domains involving vulnerability and personal stakes. In these domains, conversational quality is necessary but not sufficient.

What matters equally is the invisible infrastructure: governance, jurisdiction, architectural commitments made when no one is watching.
