Theory of Mind: Why Understanding People Requires More Than Language

Theory of Mind is a form of emotional intelligence that allows us to understand others’ thoughts and navigate social situations.

Margot Lor-Lhommet

Chief Technical Officer, PhD

Simone uses a computational “Theory of Mind” to build structured mental representations of users: rather than just storing conversations, she models goals, relationships, and beliefs. This enables personalized, explainable coaching that goes beyond what LLMs and RAG architectures can offer.

You’ve mentioned Lucy twelve times across three months of conversations. An AI can retrieve every mention of her. But can it tell that your relationship shifted two weeks ago after a team restructure, and that this unresolved tension is now affecting your confidence? That requires something most AIs don’t have: a model of who Lucy is to you.

The invisible skill behind human support

When we talk about human intelligence, we often overlook one of its most fundamental components: the ability to understand what others are thinking and to reason in a social context. Cognitive scientists call this “Theory of Mind.”

This ability is not reserved for support professionals. It is something we all do spontaneously when someone confides in us. We remember what they told us before. We keep their goals and doubts in mind. We understand the relational dynamics around them. We anticipate how a particular person might react.

Humans don’t store sentences. We build a mental representation of the other person - their reality, their relationships, their evolving situation.

This is precisely what makes deep support possible, whether it comes from a close friend, a mentor, a caring manager, or a professional coach. And this is precisely what we have built into Simone.

Can AI do this?

If understanding someone means building a structured mental model of who they are, what they want, and how they relate to others — what does that imply for AI systems designed to support people?

Most of today’s AI assistants rely on Large Language Models. And LLMs do not maintain an explicit, persistent, inspectable representation of the person they are talking to.

Their “memory” is a context window: a linear sequence of recent messages. There is no hierarchy, no structure, no distinction between an established fact and a passing hypothesis. They generate language from statistical patterns. They do not construct a mental model of the person they are talking to. This is not a flaw — it is simply not what they were designed for. LLMs are powerful tools for generating fluent, contextually relevant language. But fluency is not understanding.

Why RAG doesn’t solve the problem

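Some systems attempt to work around this limitation using RAG (Retrieval-Augmented Generation): past messages are stored, and the ones most similar to the current message are retrieved and added to the model’s context.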

This approach sounds promising, but remains fundamentally limited.

Semantic similarity is not contextual relevance

If you mention a problem with Lucy, a RAG system will retrieve other mentions of Lucy. But it won’t know that Lucy is your manager, that your relationship deteriorated after a reorganization, or that this tension is affecting how you show up in meetings. The retrieved information is decontextualized and therefore difficult to interpret.

There is no relational reasoning

In an LLM or RAG pipeline, relational reasoning is not grounded in an explicit relational state; it remains implicit, fragile, and hard to keep consistent over time.

As a result, LLMs cannot reliably reason through the implications of apparently contradictory information, such as “Lucy prefers direct communication” and “your director values diplomacy”, let alone figure out what you should actually do about it.

There is no temporal consistency

Similarity search doesn’t distinguish between “I’m thinking of resigning” said six months ago in a moment of frustration, and the same sentence said last week after careful deliberation. These carry very different weight in understanding where you are.
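The difference can be sketched in a few lines, assuming each statement is stored with a date and a context label. The names `Statement`, `keyword_matches` and `current_stance`, the context labels, and the dates are all invented for illustration and do not describe Simone's implementation. Plain keyword matching stands in for an embedding-based similarity search.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Statement:
    text: str
    said_on: date
    context: str  # hypothetical label, e.g. "frustration" or "deliberation"


def keyword_matches(statements, query):
    """Stand-in for similarity search: every match, ignoring when or why it was said."""
    return [s for s in statements if query.lower() in s.text.lower()]


def current_stance(statements, query):
    """Time-aware alternative: the most recent matching statement, or None."""
    matches = keyword_matches(statements, query)
    return max(matches, key=lambda s: s.said_on, default=None)


# Illustrative data only: the same sentence, said twice in different circumstances.
history = [
    Statement("I'm thinking of resigning", date(2024, 1, 10), "frustration"),
    Statement("I'm thinking of resigning", date(2024, 7, 3), "deliberation"),
]

latest = current_stance(history, "resigning")
print(latest.said_on, latest.context)
```

The retrieval function returns both statements as equally relevant; only the version that keeps dates and context can tell which one describes where the person is now.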

Storing conversations is not the same as modeling a person

How Simone builds her representation

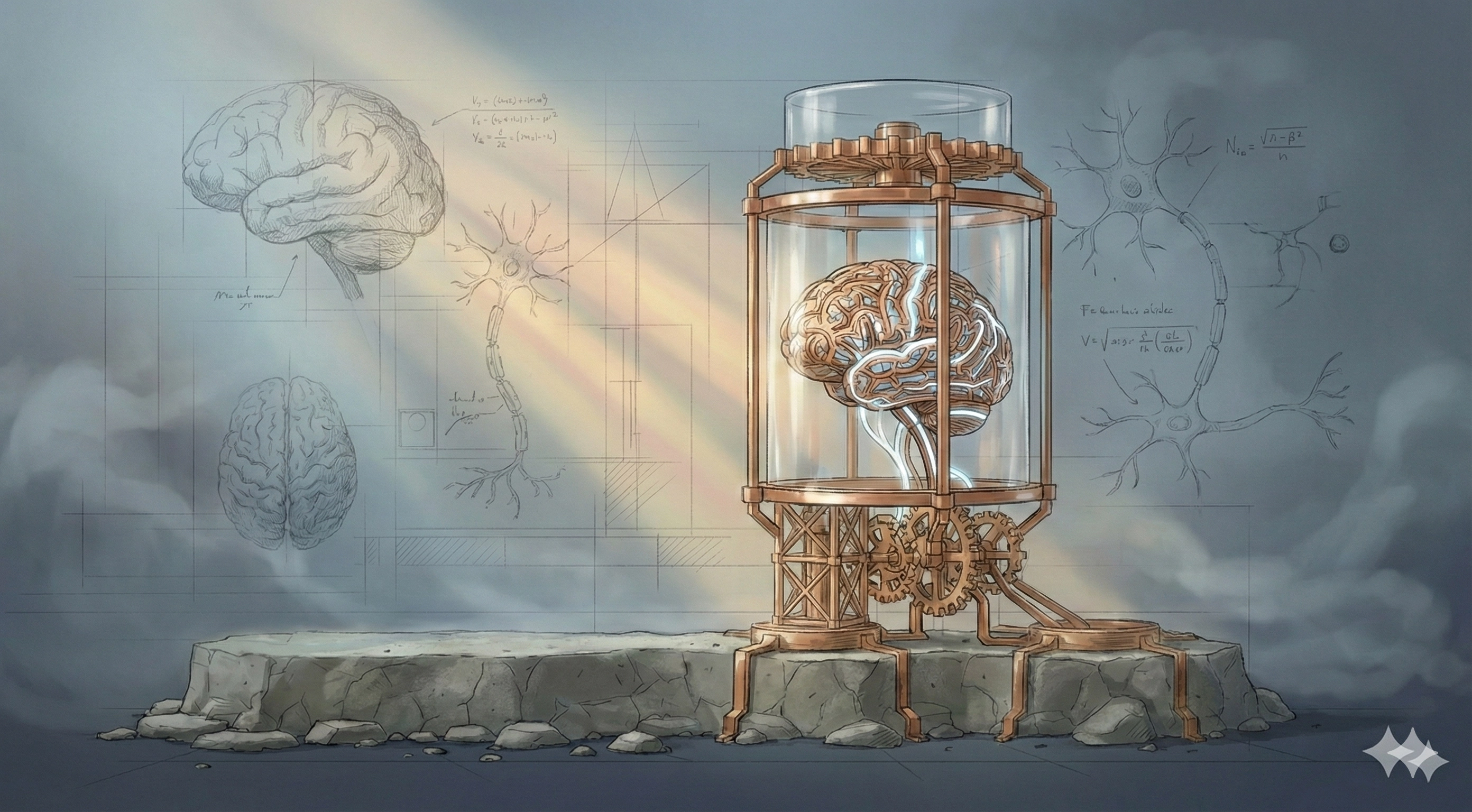

At Tigo Labs, we have equipped Simone with a computational “Theory of Mind” based on proven scientific models.

She builds a structured mental map that represents entities (you, the people around you, your goals, your projects), relationships (hierarchical, collaborative, conflicting, supportive), and attributes (personality traits, values, beliefs, communication preferences).
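To make the idea concrete, here is a minimal sketch of what such a map could look like as a data structure. It is an illustration under stated assumptions, not Simone's actual schema: the class names (`Entity`, `Relationship`, `MentalMap`), their fields, and the sample values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    """A person, goal, or project in the user's world (hypothetical schema)."""
    name: str
    kind: str  # e.g. "person", "goal", "project"
    attributes: dict = field(default_factory=dict)  # traits, values, preferences


@dataclass
class Relationship:
    """A typed, directed link between two entities."""
    source: str
    target: str
    kind: str  # e.g. "hierarchical", "collaborative", "conflicting", "supportive"


@dataclass
class MentalMap:
    entities: dict = field(default_factory=dict)
    relationships: list = field(default_factory=list)

    def add_entity(self, entity):
        self.entities[entity.name] = entity

    def relate(self, source, target, kind):
        # Both ends must already exist, so the map never points at unknown entities.
        if source not in self.entities or target not in self.entities:
            raise KeyError("both entities must be added before relating them")
        self.relationships.append(Relationship(source, target, kind))

    def relations_of(self, name):
        """Every relationship in which the named entity takes part."""
        return [r for r in self.relationships if name in (r.source, r.target)]


# Illustrative data only.
mental_map = MentalMap()
mental_map.add_entity(Entity("you", "person"))
mental_map.add_entity(Entity("Lucy", "person", {"communication": "direct"}))
mental_map.relate("Lucy", "you", "hierarchical")
```

Unlike a list of past messages, this structure can answer "who is Lucy to you?" directly, by reading the relationships attached to her.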

This representation is not static. It evolves as you converse. Most importantly, it is explainable. Unlike LLMs, whose suggestions emerge from a black box, Simone’s mental map allows you to see where her suggestions come from - what beliefs, relationships and context they are based on.

This transparency is not a feature. It is the foundation of trust.

What this makes possible

This architecture changes what a coaching AI can do.

Recommendations take into account your goals, personality, social environment, and history — not just the last few messages. Simone remembers what you told her six months ago, connects it to your current situation, and detects patterns and shifts. When she suggests that you approach a topic differently with Lucy, it’s not generic advice — it’s a recommendation grounded in Lucy’s personality, your history of interactions, and the organizational context you both operate in. And because the mental map is visible, you can see what Simone believes about your situation — and correct her if she’s wrong.

Representation + reasoning

A complete computational Theory of Mind requires two inseparable components: representation (the structured mental map) and reasoning (the mechanisms that operate on this representation to simulate, predict, and guide).

Simone integrates both. Structured representation allows her to know who you are. Reasoning processes allow her to understand what that implies.
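As a toy illustration of reasoning that operates on a representation, the sketch below picks a communication style for a given audience and returns the beliefs that justify it. Everything here is an assumption made for the example: the `Belief` and `Suggestion` types, the `suggest_style` rule, and the wording of the advice are hypothetical and far simpler than a real reasoning mechanism.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Belief:
    subject: str
    attribute: str
    value: str


@dataclass(frozen=True)
class Suggestion:
    advice: str
    based_on: tuple  # the beliefs that justify the advice


def suggest_style(beliefs, audience):
    """Pick a communication style for an audience and keep the supporting beliefs."""
    used = tuple(
        b for b in beliefs
        if b.subject in audience and b.attribute == "communication"
    )
    styles = {b.value for b in used}
    if not styles:
        advice = "no known preference; ask first"
    elif len(styles) == 1:
        advice = f"use a {styles.pop()} style"
    else:
        # Toy rule for conflicting preferences, invented for this example.
        advice = "mixed audience: state the point plainly, then frame it tactfully"
    return Suggestion(advice, used)


# Illustrative beliefs, taken from the examples in this article.
beliefs = [
    Belief("Lucy", "communication", "direct"),
    Belief("director", "communication", "diplomatic"),
]

print(suggest_style(beliefs, {"Lucy"}).advice)
print(suggest_style(beliefs, {"Lucy", "director"}).advice)
```

Because each suggestion carries the beliefs it was derived from, a user can inspect them and correct any that are wrong.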

