Transparent AI Is Hard. Opaque AI Is Worse.

Most AI hides its reasoning. We made ours visible. Users can see what Simone thinks about them, and sometimes they don’t like it. But the gap between self-image and reality is exactly where growth happens.

Marie-Laure Thuret

Chief Product Officer

What happens when AI shows its reasoning? At Tigo Labs, we’re building Simone, an AI coach that makes its understanding visible. Users can see what the AI thinks about them, and sometimes they don’t like what they see. This article explores why transparency is uncomfortable, why that discomfort is valuable, and why opaque AI is never the answer for systems meant to help people understand themselves.

In a previous post, Margot explored why fluency isn’t understanding. Here’s what happens when you try to make the understanding visible.

During a test session of Simone, a user was going through her first conversation with our AI coach. As she talked, elements appeared on screen: Simone’s real-time understanding of who she was.

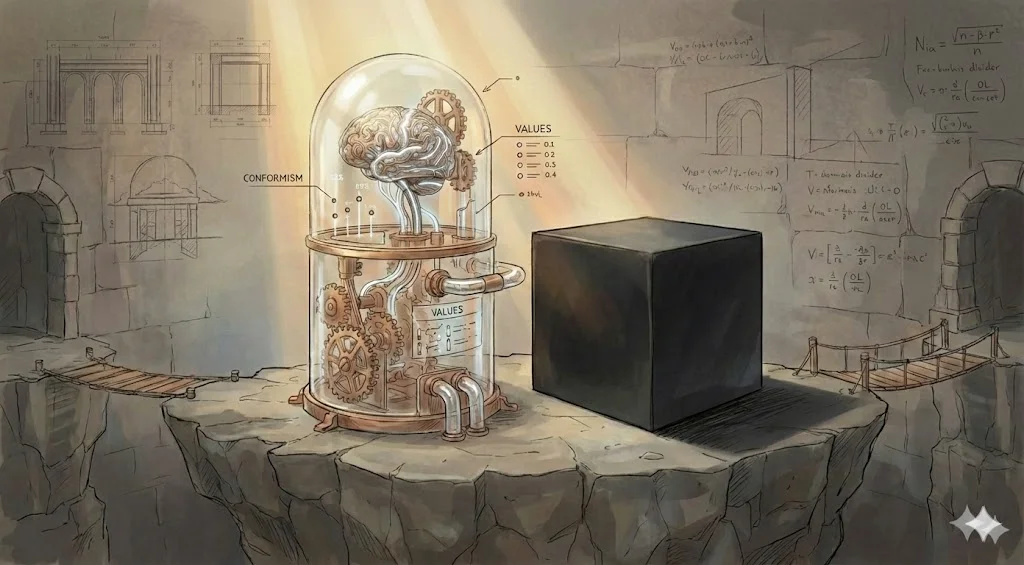

Then Simone surfaced something about conformism being a core value.

The user pushed back. “That’s not what I meant.”

But it was exactly what she meant. She just wasn’t ready to see it written down.

The black box era

We’re in the golden age of AI. Paradoxically, AI has never been more opaque.

When you talk to ChatGPT or Claude, there’s an enormous amount you’ll never see. System prompts. Guardrails. Petabytes of training data shaped by unknown assumptions, optimized for unknown outcomes.

This matters when AI summarizes your emails. It matters a lot more when AI is supposed to help you understand yourself.

When AI is meant to help people understand themselves, opacity isn’t just a technical limitation: it’s a fundamental contradiction.

What search taught me

Before Simone, I spent years at Algolia working on search relevancy. Different problem, same tension.

Take re-ranking. When you sell tents online, you also sell accessories: tent pegs, stakes, guy lines. Accessories are cheaper, so they get bought more often. If your ranking algorithm only looks at purchase events, someone who types “tents” gets tent pegs first.

Re-ranking fixes this by demoting the incidental purchases so that the tents come first. But here’s the thing: the algorithm now knows something valuable. It knows which products consistently get demoted. That’s not just a search problem. That’s market intelligence about what customers want versus what they incidentally buy.

So we made it visible. We built “shoelace” visualizations showing where products started and where they ended up after re-ranking. These insights lived in analytics because that’s what they were: customer behavior surfaced through search.
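As a rough illustration of the data behind such a visualization, the sketch below ranks a toy catalog by purchase count, re-ranks it, and records where each product started and where it ended up. Everything in it is an assumption made for illustration, not the production implementation: the `Product` fields, the purchase numbers, and especially the re-ranking rule (products matching the query's category first), which stands in for whatever a real system would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    name: str
    category: str   # hypothetical field: "tent" or "accessory"
    purchases: int  # hypothetical purchase-event count (made-up numbers)


def rank_by_purchases(products):
    """Baseline ranking: order purely by purchase count, highest first."""
    return sorted(products, key=lambda p: p.purchases, reverse=True)


def rerank(products, query_category):
    """Assumed re-ranking rule: products in the query's category come first,
    with purchase count as the tie-breaker inside each group."""
    return sorted(
        products,
        key=lambda p: (p.category != query_category, -p.purchases),
    )


def rank_shifts(before, after):
    """Return (name, start_position, end_position) per product, 1-indexed."""
    end = {p.name: i for i, p in enumerate(after, start=1)}
    return [(p.name, i, end[p.name]) for i, p in enumerate(before, start=1)]


catalog = [
    Product("tent pegs", "accessory", 950),
    Product("guy lines", "accessory", 700),
    Product("2-person tent", "tent", 120),
    Product("family tent", "tent", 80),
]

before = rank_by_purchases(catalog)
after = rerank(catalog, query_category="tent")
shifts = rank_shifts(before, after)

for name, start, finish in shifts:
    print(f"{name}: {start} -> {finish}")
```

Each tuple is one "lace": drawing the start position on the left and the end position on the right gives the crossing lines, and the products that consistently move down are the ones customers buy incidentally rather than search for.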

Customers didn’t just trust the system more. They gained insights they couldn’t get anywhere else: for marketing, inventory, understanding their audience. The explanation became the feature.

Transparency isn’t just about building trust. When done right, it creates value that wouldn’t exist otherwise.

From products to people

Now I’m building an AI coach. The stakes are completely different.

At Algolia, we explained why sneakers ranked third. With Simone, we’re explaining why the AI thinks conformism is a core value. Or that your relationship with your mother shapes how you approach conflict.

Same principle. Radically higher stakes.

The mirror

We expected users to find this useful. We didn’t expect the intensity of their reactions.

Some loved it. They’d pause, read a card, and go deeper. “Actually, yes, let me tell you more about that.”

Others pushed back. “That’s not what I said.” Sometimes they were right. Simone misunderstood, and they could clarify.

But sometimes Simone was right. The user just didn’t want to see it.

Simone categorized one user’s husband as “mixed,” a label from our way of mapping relationships on a spectrum from ally to obstacle. This made her angry. Not because Simone was wrong. She admitted the label felt accurate. But she didn’t want to see it written down.

Then she had to restart the conversation. And she told us, explicitly, that she changed how she described her husband the second time. She deliberately shaped the narrative so Simone would categorize him differently.

The gaming problem

This raises an obvious concern: doesn’t transparency invite manipulation?

If users can see how Simone understands them, they can game the system. They can lie. Not to Simone, but to themselves, through Simone.

The counter-argument writes itself: keep the reasoning hidden, and users can’t manipulate it.

But this misses the point.

A coaching relationship built on hidden reasoning isn’t coaching. It’s faith. You’re trusting a system you don’t understand to tell you things about yourself you can’t verify.

Yes, users will try to game the conversation. That’s Simone’s problem to solve: not by hiding her reasoning, but by noticing when someone is performing rather than reflecting. Her job is to gently challenge inconsistencies.

The user who changed how she described her husband? That’s valuable information. The gap between what we want to believe and what we know: that’s where coaching happens.

A black box would have missed this entirely. Transparency revealed the gap between self-image and reality: the exact space where growth happens.

The foundation

At Tigo Labs, transparency isn’t a feature we’ll add later. It’s the foundation.

Every conversation Simone has, every insight she generates: we’re building infrastructure to make the “why” visible. Not because it’s easy. But because it’s the only way to build AI that serves the humans using it.

The glass box isn’t just better product design. It’s a statement about what AI should be.

